Their god is not our god

African AI researchers warn against Silicon Valley’s ‘artificial intelligence’ hype. They also talk of the imperative for Africans to build their own AI future.

Prompt this computer program to describe itself and it says it is “just like talking to a knowledgeable friend”.

Released by San Francisco-based company OpenAI in November, ChatGPT mimics human conversation, instantly responding to anything you type into its chatbox. It can tell you a joke and compose song lyrics; it can draft your presentation notes and offer relationship advice. You think of “it” but it refers to itself in the first person – “I”.

ChatGPT works seamlessly with OpenAI’s other flagship product: Dall-E, which can make any pictures and illustrations that you describe with text prompts.

Other tech companies, like Google and Facebook, have already released their own versions of this enormously powerful technology that promises to revolutionise the way that we work and communicate.

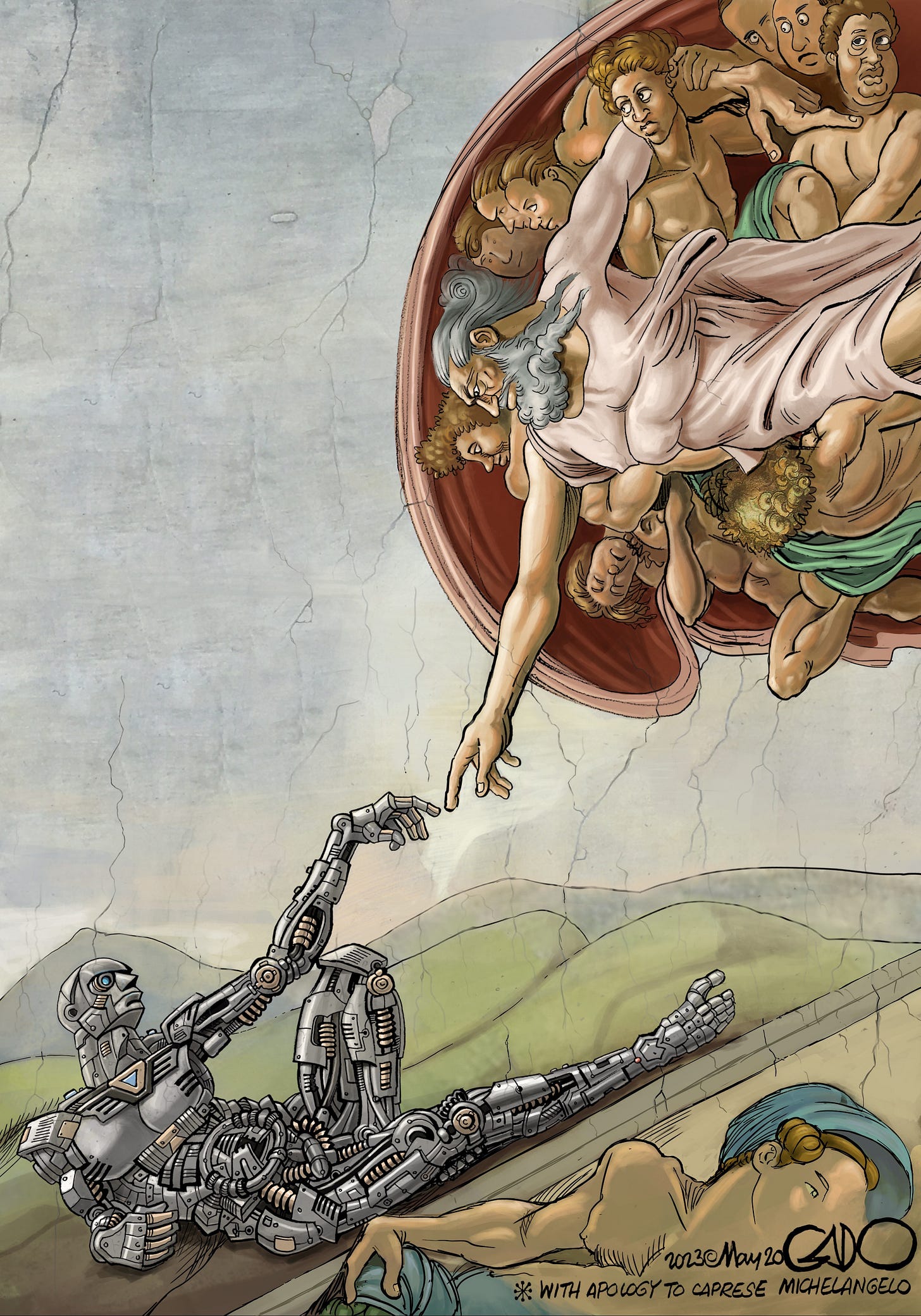

But its creators believe it capable of so much more than that. Branding their creations as “artificial intelligence”, they claim that it will eventually make machines smarter – much smarter – than humans.


“This technology could help us elevate humanity by increasing abundance, turbocharging the global economy, and aiding in the discovery of new scientific knowledge that changes the limits of possibility,” writes OpenAI’s chief executive, a 38-year-old American named Sam Altman, on the company’s website. “Our mission is to ensure that artificial general intelligence – AI systems that are generally smarter than humans – benefits all of humanity,” Altman said.

Climate cost, bias and deception

“It’s a god they are trying to build,” Ethiopia-born computer scientist Timnit Gebru remarks in an interview with The Continent. Gebru, whose work on bias in internet algorithms saw her co-lead Google’s Artificial Intelligence ethics team, is not too preoccupied by the “intelligence” of such software.

If anything, the programs in their current incarnation are more like sophisticated parrots – incapable of original thought.

That’s because programs like ChatGPT are trained on vast amounts of human words and conversation – much of it scraped from the internet without consent or respect for copyright, a form of intellectual property theft. When asked, the software can replicate the patterns and connections in those vast datasets, in a way that, to human eyes, can feel like “intelligence”. But the software is not thinking. It is merely regurgitating the data on which it has been trained – and its answers are entirely dependent on the content of that data (although it also has a tendency to invent false answers, which its creators describe by borrowing the human term “hallucinations”).

Gebru has dedicated much of her career to highlighting the immediate risks posed by this new software. She and several co-authors wrote a paper in 2020 – which cost her her job at Google – that outlined some of these, including:

the extreme environmental impact (the energy used to train ChatGPT just once on a huge dataset of human language could power 12,000 Johannesburg homes for a month);

the potential for inherent biases and discrimination (if the data is racist and sexist – as so much of the internet is – the outputs will be too); and

the potential for such models to deceive users (because they are so good at sounding like us, they can easily fool humans – even when the content is inaccurate or totally invented).

An African AI

In a co-working space in Johannesburg, a different vision for artificial intelligence is being pioneered. Lelapa AI is not trying to create one program to outsmart us all. Instead, it is creating focused programs that use machine learning and other tools to target specific needs. Its first major project, Vulavula, is designed to provide translation and transcription services for under-represented languages in South Africa. Instead of harvesting the web for other people’s data, Lelapa AI works with linguists and local communities to collect information – and then gives them a stake in future profits.

The Continent spoke with two of the company’s founders, Jade Abbott and Pelonomi Moiloa, last week. They share Gebru’s fears – as do a striking number of women, and especially women of colour, in the AI field.

“These programs are built by the West on data from the West, and represent their values and principles,” said Abbott, who notes that African perspectives and history are largely excluded from the datasets used by OpenAI and Google’s LLMs. That’s because they cannot easily be “scraped”. Much of African history is recorded orally, or was destroyed by colonisers; and African languages are simply not supported (speak to ChatGPT in Setswana or isiZulu and its responses will be largely nonsensical).

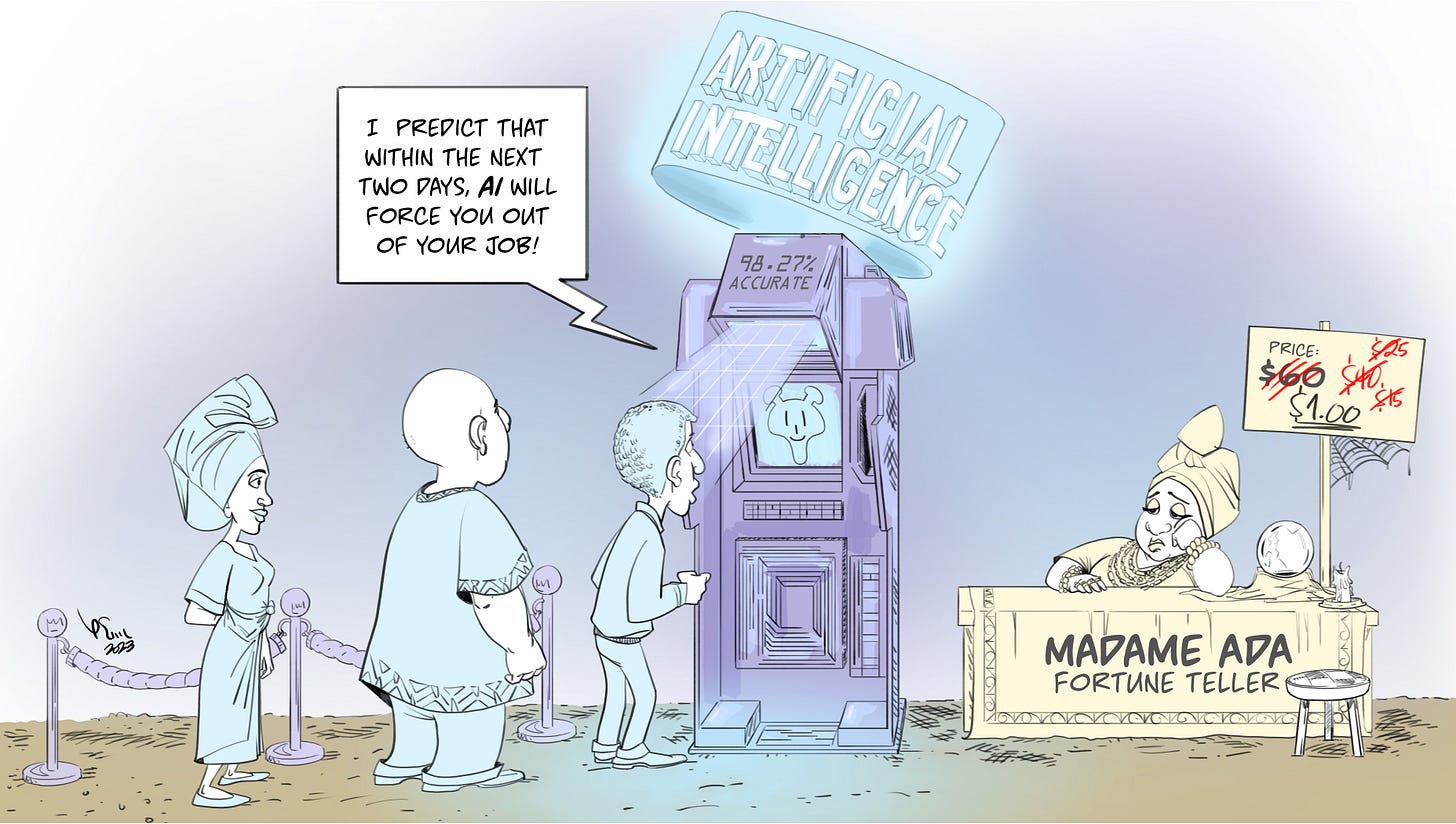

For Lelapa, this represents an opportunity. Because African data is so hard to access, OpenAI and Google will struggle to make their tools work effectively on the continent – leaving a gap in the market for a homegrown alternative. “The fact that ChatGPT fails on our languages … this is the chance for us to build our own house, before they figure out how to exploit us,” said Moiloa.

The consequences of failing to build our own house are potentially severe, she said – and all too familiar. “Data is the new gold. They will extract the data from us, create programs and then sell those programs back to us. And then all the profits flow out.”

Gebru tends to agree: “They’ve put the equivalent of an oil spill into the information ecosystem. Who gets to profit from it? And who gets to deal with the waste? It’s the exact same pattern as imperialism.”